While writing a web crawler today, I ran into the following error:

![Python requests exception: Max retries exceeded with url, CERTIFICATE_VERIFY_FAILED](https://www.linfengnet.com/wp-content/uploads/2024/01/2024010606414043.png)

    Max retries exceeded with url: https://******... (Caused by SSLError(SSLCertVerificationError(1, '[SSL: CERTIFICATE_VERIFY_FAILED] certificate verify failed: unable to get local issuer certificate (_ssl.c:1129)')))

![Translation of the Python requests error message](https://www.linfengnet.com/wp-content/uploads/2024/01/2024010606285333.png)

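Roughly, the error means that requests gave up after exhausting its retries for the URL, and the underlying cause is that SSL certificate verification failed: the issuer of the server's certificate could not be found in the local trust store.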
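To make "certificate verification" a bit more concrete, here is a minimal sketch that uses only the standard library ssl module. It is not what requests runs internally (requests sets up TLS through urllib3), so treat it as an analogy: the first context shows the default client behavior, where a certificate must chain to a trusted CA, and the second shows roughly what turning verification off looks like at this level. The variable names are my own.

```python
import ssl

# Default client-side context: the server certificate must chain to a
# trusted CA and the hostname must match.
verified = ssl.create_default_context()
print(verified.verify_mode == ssl.CERT_REQUIRED)  # True
print(verified.check_hostname)                    # True

# Illustration only: a context with verification switched off.
unverified = ssl.create_default_context()
unverified.check_hostname = False       # must be disabled before CERT_NONE
unverified.verify_mode = ssl.CERT_NONE
print(unverified.verify_mode == ssl.CERT_NONE)    # True
```

When the check in the first context cannot find the issuer of the server's certificate, you get the kind of CERTIFICATE_VERIFY_FAILED error shown above.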

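This error is easy to get past: adding the verify=False parameter to the requests call makes it go away, because requests then skips certificate verification for that request.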

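As follows:

    import requests
    import logging

    # Route warnings (including urllib3's InsecureRequestWarning) into the logging system
    logging.captureWarnings(True)

    url = "https://example.com"  # placeholder; substitute the address you are requesting

    # verify=False skips SSL certificate verification for this request
    r = requests.get(url, timeout=15, verify=False)

You can see that the sample code above also contains two more lines: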

import logging

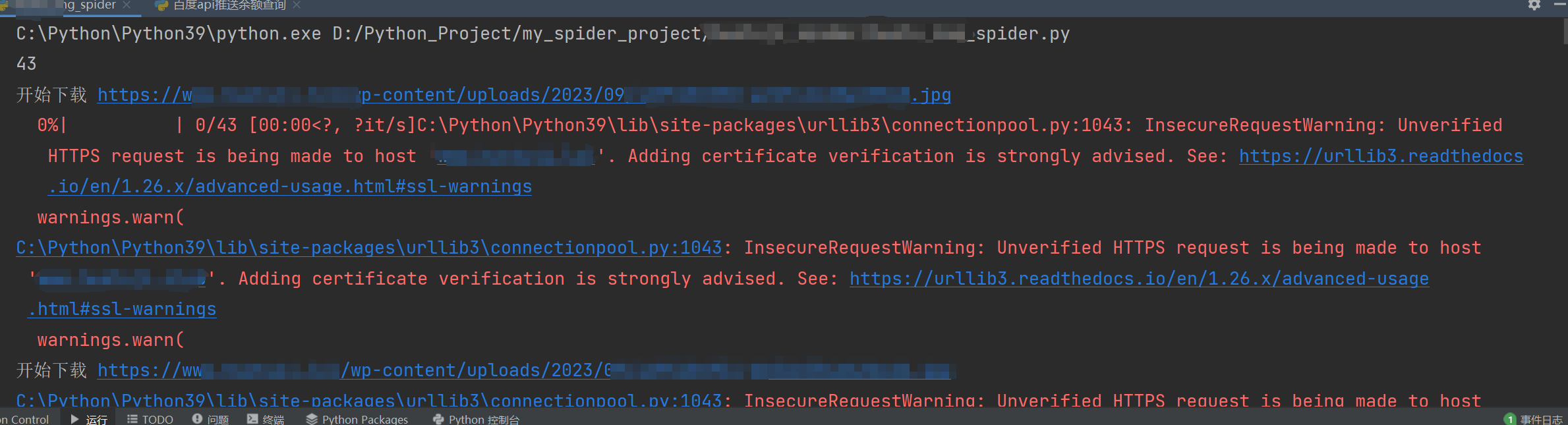

logging.captureWarnings(True)这是因为如果不加上的话 会出现如下警告信息:

    C:\Python\Python39\lib\site-packages\urllib3\connectionpool.py:1043: InsecureRequestWarning: Unverified HTTPS request is being made to host 'doamin.com'. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.io/en/1.26.x/advanced-usage.html#ssl-warnings
      warnings.warn(

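So with logging.captureWarnings(True) in place, warnings are redirected into the logging system (the py.warnings logger) instead of being printed to the console, and the message no longer pops up unless you configure logging to output it.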
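If you want to see where the warning goes after logging.captureWarnings(True), the following sketch may help. It uses only the standard library and raises a demo warning by hand instead of making a real HTTPS request. ListHandler is a hypothetical helper I wrote for this illustration, and the warning text is made up to resemble the real one.

```python
import logging
import warnings


class ListHandler(logging.Handler):
    """Hypothetical helper: collects log messages in a list for inspection."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(record.getMessage())


handler = ListHandler()
# Captured warnings are logged to the logger named "py.warnings".
logging.getLogger("py.warnings").addHandler(handler)

logging.captureWarnings(True)
warnings.simplefilter("always")  # make sure the demo warning is not filtered out
warnings.warn("Unverified HTTPS request (demo message)", UserWarning)
logging.captureWarnings(False)   # restore the normal warning output

print(len(handler.messages))     # 1
```

The warning ends up in the handler's list as a log message rather than on the console, which is why it seems to disappear when logging has not been configured to print anything.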

For a detailed introduction to the verify parameter of Python requests, along with related articles, see my earlier post: